Topics of this blog are related to multimedia communication, in particular the streaming of multimedia content within heterogeneous environments, enabling Universal Multimedia Experience (UME).

Thursday, July 16, 2020

MPEG131 Press Release: Call for Proposals on Technologies for MPEG-21 Contracts to Smart Contracts Conversion

Friday, August 28, 2015

One Year of MPEG

- MPEG-21 - The Multimedia Framework

- MPEG-M - MPEG extensible middleware (MXM), later renamed to multimedia service platform technologies

- MPEG-V - Information exchange with Virtual Worlds, later renamed to media context and control

- MPEG-DASH - Dynamic Adaptive Streaming over HTTP

MPEG-M

The lessons learned from MPEG-21 were one reason why I joined the MPEG-M project, as its purpose was exactly to create an API into various MPEG technologies, providing developers with a tool that makes it easy for them to adopt new technologies and, thus, new formats/standards. We created an entire architecture, APIs, and reference software to make it easy for external people to adopt MPEG technologies. The goal was to hide the complexity of the technology behind simple-to-use APIs, which should enable the accelerated development of components, solutions, and applications utilising digital media content. A good overview of MPEG-M can be found on this poster.

MPEG-V

When MPEG started working on MPEG-V (it was not called that in the beginning), I saw it as an extension of UMA and MPEG-21 DIA to go beyond audio-visual experiences by stimulating potentially all human senses. We created and standardised an XML-based language that enables the annotation of multimedia content with sensory effects. Later the scope was extended to include virtual worlds, which resulted in the acronym MPEG-V. It also led me to start working on Quality of Experience (QoE), and we coined the term Quality of Sensory Experience (QuASE) as part of the (virtual) SELab at Alpen-Adria-Universität Klagenfurt, which offers a rich set of open-source software tools and datasets around this topic on top of off-the-shelf hardware (still in use in my office).

MPEG-DASH

The latest project I’m working on is MPEG-DASH, where I’ve also co-founded bitmovin, now a successful startup offering the fastest transcoding in the cloud (bitcodin) and high-quality MPEG-DASH players (bitdash). It all started when MPEG asked me to chair the evaluation of the call for proposals on HTTP streaming of MPEG media. We then created dash.itec.aau.at, which offers a huge set of open source tools and datasets used by both academia and industry worldwide (e.g., listed on DASH-IF). I think I can proudly state that this is the most successful MPEG activity I've been involved in so far... (note: a live deployment can be found here which shows 24/7 music videos over the Internet using bitcodin and bitdash).

DASH and QuASE are also part of my habilitation, which brought me into my current position at Alpen-Adria-Universität Klagenfurt as Associate Professor. Finally, one might ask the question: was it all worth spending so much time for MPEG and at MPEG meetings? I would say YES, and there are many reasons which could easily result in another blog post (or more), but it’s better to discuss this face to face. I'm sure there will be plenty of possibilities in the (near) future, or you come to Klagenfurt, e.g., for ACM MMSys 2016 ...

Monday, September 14, 2009

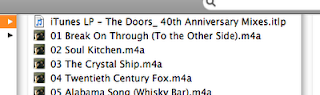

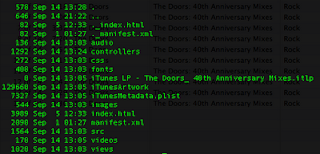

iTunesLP: The "first" (MPEG-21) Digital Item?

However, what's behind is a simple directory structure comprising an index.html linking to a set of CSS files (css) and JavaScript files (controllers and src). Furthermore, the directory structure includes links to fonts, images, and videos required for the presentation of the LP and an Apple .plist file. The views directory provides something like the sub-pages from an album's booklet. That is, the iTunesLP is basically a Web site (i.e., HTML+CSS+JavaScript) and iTunes becomes an ordinary Web browser? Hmm, finally I tried to transfer the iTunesLP to the iPhone but it seems that the iPhone cannot play the album in the same way iTunes does.

On the other hand, is it possible to realize the functionality provided by iTunesLP with MPEG-21 tools? I would say "Yes, it is"! In particular, the relationship between the various assets (text, images, videos, etc.) can be described using Digital Item Declaration, and Digital Item Identification is used to identify the whole album and individual tracks. The interaction can be achieved by Digital Item Processing, which enables the inclusion of ECMAScript (JavaScript) within a Digital Item. Finally, the File Format provides means to package the digital item into a single file - based on the ISO base media file format and compatible with .mp4 - enabling easy transaction among users within the whole value network.

So, is the iTunesLP a digital item? - Yes! Is the iTunesLP compatible with an MPEG-21 Digital Item? - No (it is not compliant with MPEG-21) / Yes (it can be realized using MPEG-21). Additionally, MPEG-21 provides means to package a Digital Item into a single file and it is interoperable.
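To make the idea a bit more concrete, here is a minimal sketch of how an album with its tracks and booklet assets might be declared as a Digital Item. It is not taken from iTunesLP or from any reference software: the function names, the `urn:example:...` identifiers, and the file paths are all hypothetical, and the namespace URIs are the ones I recall for the second edition of DIDL and for DII, so please check them against the schemas before relying on them.

```python
import xml.etree.ElementTree as ET

# Namespace URIs as recalled for DIDL (2nd edition) and DII; treat as assumptions.
DIDL_NS = "urn:mpeg:mpeg21:2002:02-DIDL-NS"
DII_NS = "urn:mpeg:mpeg21:2002:01-DII-NS"
ET.register_namespace("didl", DIDL_NS)
ET.register_namespace("dii", DII_NS)


def q(ns, tag):
    """Build a namespace-qualified tag name for ElementTree."""
    return f"{{{ns}}}{tag}"


def add_identifier(item, urn):
    """Attach a DII Identifier to an Item via Descriptor/Statement."""
    descriptor = ET.SubElement(item, q(DIDL_NS, "Descriptor"))
    statement = ET.SubElement(descriptor, q(DIDL_NS, "Statement"), mimeType="text/xml")
    ET.SubElement(statement, q(DII_NS, "Identifier")).text = urn


def add_resource(item, mime_type, ref):
    """Attach a referenced resource to an Item via Component/Resource."""
    component = ET.SubElement(item, q(DIDL_NS, "Component"))
    ET.SubElement(component, q(DIDL_NS, "Resource"), mimeType=mime_type, ref=ref)


def build_album(album_urn, tracks, extras):
    """Declare an album Item holding booklet assets and one sub-Item per track."""
    didl = ET.Element(q(DIDL_NS, "DIDL"))
    album = ET.SubElement(didl, q(DIDL_NS, "Item"))
    add_identifier(album, album_urn)
    for mime_type, ref in extras:
        add_resource(album, mime_type, ref)
    for track_urn, mime_type, ref in tracks:
        track = ET.SubElement(album, q(DIDL_NS, "Item"))
        add_identifier(track, track_urn)
        add_resource(track, mime_type, ref)
    return didl


# Hypothetical album: identifiers and paths are placeholders.
didl_doc = build_album(
    "urn:example:album:1",
    tracks=[
        ("urn:example:album:1:track:1", "audio/mp4", "audio/track01.m4a"),
        ("urn:example:album:1:track:2", "audio/mp4", "audio/track02.m4a"),
    ],
    extras=[("text/html", "index.html"), ("image/jpeg", "images/cover.jpg")],
)
print(ET.tostring(didl_doc, encoding="unicode"))
```

The nesting mirrors the argument above: the outer Item identifies the whole album, each inner Item identifies one track, and the HTML/CSS/JavaScript assets become plain resources of the album Item.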

Tuesday, August 18, 2009

Second Amendment to "Most Wanted: MPEG-21 Industry Adoptions"

Wow, I'm happy that the list is growing and I'll soon issue a second edition of the MPEG-21 industry adoptions.

Wednesday, July 29, 2009

Amendment to "Most Wanted: MPEG-21 Industry Adoptions"

Monday, July 27, 2009

Most Wanted: MPEG-21 Industry Adoptions

- The Universal Plug and Play (UPnP) forum adopted the concept of MPEG-21 Digital Items and defined DIDL-Lite as part of their ContentDirectory:2 Service Template. However, it is a derivation from a subset of the MPEG-21 DIDL. Within UPnP it is used as container format which has been enhanced with UPnP-specific data such as media resource attributes and Dublin Core metadata.

- The abstract Digital Item model has been adopted within Microsoft’s Interactive Media Manager (IMM) and implemented using the Web Ontology Language (OWL). It uses Dublin Core but also allows for the inclusion of domain-specific metadata (e.g., IPTC, EXIF, XMP, SMPTE, etc) or custom ontology predicates. Interestingly, IMM also adopts Part 3 of MPEG-21 – Digital Item Identification (DII) – which allows for uniquely identifying Digital Items and parts thereof. Unfortunately, Microsoft has discontinued the IMM solution.

- Several EC-funded projects (e.g., DANAE, AXMEDIS, ENTHRONE) have adopted a wide range of MPEG-21 technologies and provided reference applications on top of them.

- A rather uncommon adoption of MPEG-21 - since it is not in the 'core multimedia area' - is for the representation of complex digital objects in the Los Alamos National Laboratory Digital Library. See here and here for details.

- The Digital Media Project (DMP) adopted a wide range of MPEG-21 technologies mainly focusing on digital rights management. See the digital media manifesto for background information about DMP.

- Of course, there is a wide range of 'MPEG internal' adoptions: MPEG-21 IPMP Components defines its own syntax enabling the declaration of protected Digital Items; some MPEG Multimedia Application Formats (MAFs) make use of Digital Items; the MPEG Extensible Middleware (MXM) defines an API to provide access to MPEG-21 technologies (among others).
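For readers who have never seen the UPnP flavour mentioned in the first item, the following is a small sketch of reading a DIDL-Lite fragment with Python's standard library. The sample document is made up for illustration (the title, artist, and the 192.0.2.10 address are placeholders), and `parse_items` is a hypothetical helper, not part of any UPnP stack.

```python
import xml.etree.ElementTree as ET

# Namespaces used by DIDL-Lite documents in the UPnP ContentDirectory service.
NS = {
    "didl": "urn:schemas-upnp-org:metadata-1-0/DIDL-Lite/",
    "dc": "http://purl.org/dc/elements/1.1/",
    "upnp": "urn:schemas-upnp-org:metadata-1-0/upnp/",
}

# Made-up sample; all values are placeholders.
SAMPLE = """<DIDL-Lite xmlns="urn:schemas-upnp-org:metadata-1-0/DIDL-Lite/"
    xmlns:dc="http://purl.org/dc/elements/1.1/"
    xmlns:upnp="urn:schemas-upnp-org:metadata-1-0/upnp/">
  <item id="track-1" parentID="album-1" restricted="1">
    <dc:title>Example Track</dc:title>
    <dc:creator>Example Artist</dc:creator>
    <upnp:class>object.item.audioItem.musicTrack</upnp:class>
    <res protocolInfo="http-get:*:audio/mpeg:*">http://192.0.2.10/media/track-1.mp3</res>
  </item>
</DIDL-Lite>"""


def parse_items(xml_text):
    """Return one dict per DIDL-Lite item with its Dublin Core and UPnP data."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.findall("didl:item", NS):
        res = item.find("didl:res", NS)
        items.append({
            "id": item.get("id"),
            "title": item.findtext("dc:title", namespaces=NS),
            "creator": item.findtext("dc:creator", namespaces=NS),
            "upnp_class": item.findtext("upnp:class", namespaces=NS),
            "url": res.text.strip() if res is not None and res.text else None,
            "protocol_info": res.get("protocolInfo") if res is not None else None,
        })
    return items


for entry in parse_items(SAMPLE):
    print(entry)
```

The sample shows the point made above: the container is DIDL-like, while the descriptive data comes from Dublin Core and the media resource attributes are UPnP-specific.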

Sunday, June 21, 2009

Why do we need a Content‐Centric Future Internet?

Executive Summary

The aim of this document is twofold: firstly, to report and analyse the main reasons, which support our claim that the Future Internet will be “Content‐Centric” and secondly to define two alternative solutions for a Future Content‐Centric Internet Architecture following an evolutionary and a clean‐slate approach.

The starting point of our discussion is the reasonable hypothesis that Future Internet will mainly simplify the usability, increase the efficiency, secure the privacy and enhance the media experience of the users (enhanced mobility, really broadband & flexible communications, immersion, enhanced interaction, involvement of all senses and emotions, navigation). New ways of media creation and consumption will emerge, aiming to cover the different human needs and preserve the revenue generation of the various stakeholders. Moreover, new content types will appear, which together with efficient handling, delivery and protection of the content (i.e. static or dynamic, pre‐recorded, cached or live) will be the Future Internet cornerstones. Thus, the content/media and its efficient handling are (in) the heart of the Future Internet.

Taking into account the fact that the current Internet cannot efficiently serve the increasing needs and the foreseen requirements, two Content‐Centric Internet Architectures are proposed: a “Logical Content‐Centric Architecture”, which consists of different virtual hierarchies of nodes with different functionality and an “Autonomic Content‐Centric‐Internet Architecture”, which relies on the completely novel concept of the “content object”.

Yet, the major objective of this position paper is to initiate a debate between all the interested stakeholders with respect to the following three fundamental arguments:

- Will the Future Internet be Content‐Centric?

- What would a potential Content‐Centric Internet Architecture look like?

- Which design principles and requirements would govern such Architecture?

Tuesday, May 5, 2009

MPEG Press Release: MPEG Explores New Technologies for High Performance Video Coding (HVC)

Maui, Hawaii, USA – The 88th MPEG meeting was held in Maui, Hawaii, USA from the 20th to the 24th of April 2009.

Highlights of the 88th Meeting

Call for Evidence of Technologies Issued for HVC

Technology evolution will soon make possible the capture and display of video material with a quantum leap in quality when compared to the quality of HDTV. However, networks are already finding it difficult to carry HDTV content to end users at data rates that are economical. Therefore, a further increase of data rates, such as soon will be possible, will put additional pressure on the networks. For example:

- High-definition (HD) displays and cameras are affordable for consumer usage today, while the currently available internet and broadcast network capacity is not sufficient to transfer large amount of HD content economically. While this situation may change slowly over time, the next generation of ultra-HD (UHD) contents and devices, such as 4Kx2K displays for home cinema applications and digital cameras, are already appearing on the horizon.

- For mobile terminals, lightweight HD resolutions such as 720p or beyond will be introduced to provide perceptual quality similar to that of home applications. Lack of sufficient data rates as well as the prices to be paid for transmission will remain a problem for the long term.

MPEG has concluded that video bitrate (when current compression technology is used) will go up faster than the network infrastructure will be able to carry economically, both for wireless and wired networks. Therefore a new generation of video compression technology with sufficiently higher compression capability than the existing AVC standard in its best configuration (the High Profile), is needed. Such High-Performance Video Coding (HVC) would be intended mainly for high quality applications, by providing performance improvements in terms of coding efficiency at higher resolutions, with applicability for entertainment-quality services such as HD mobile, home cinema and Ultra High Definition (UHD) TV.

To start a more rigorous assessment about the feasibility of HVC, a Call for Evidence has been issued, with the expectation that responses would report about the existence of technologies that would be able to fulfill the aforementioned goals. A set of appropriate test materials and rate points that would match the requirements of HVC application scenarios has been defined. Responses to this call will be evaluated at the 89th MPEG meeting in July 2009. Depending on the outcome of this Call for Evidence, MPEG may issue a Draft Call for Proposals by the end of its 89th meeting. The Call for Evidence can be found as document ISO/IEC JTC1/SC29/WG11 N10553 at http://www.chiariglione.org/mpeg/hot_news.htm

MPEG Seeks Technologies to link Real and Virtual Worlds

At its 88th meeting, MPEG has published updated requirements (ISO/IEC JTC1/SC29/WG11 N10235) and issued an extended call for proposals (ISO/IEC JTC1/SC29/WG11 N10526) for an extension of the Media Context and Control project (ISO/IEC 23005 or MPEG-V) to standardize intermediate formats and protocols for the exchange of information between the (real) physical and virtual worlds. In particular, this extended call for proposals seeks technologies related to haptics and tactile, emotions, and virtual goods. Specifically, the goal of this project (formally called Information Exchange with Virtual Worlds) is to provide a standardized global framework and associated data representations to enable the interoperability between different virtual worlds (e.g. a digital content provider of a virtual world, a game with the exchange of real currency, or a simulator) and between virtual worlds and the real world (sensors, actuators, robotics, travel, real estate, or other physical systems). MPEG invites all parties with relevant technologies to submit these technologies for consideration. For more information, refer to the above documents, which are available at http://www.chiariglione.org/mpeg/hot_news.htm.

Digital Radio Service to be Extended with new BIFS

At its 88th meeting, MPEG has been informed by the digital radio industry of the increasing need for a new interactive BInary Format for Scenes (BIFS) service for digital radio. This new service will enable the presentation of supplemental information like EPG or advertisements on radios with displays capable of supporting this service. In addition, such displays may be used for controlling the radio.

In order to fulfill the additional requirements for this new service, MPEG has issued a Call for Proposals for new BIFS technologies in N10568. The result of this call will be used to define a new amendment for BIFS and a profile, including the new technologies, backward compatible with Core2D@level1.

The requirements for Interactive Services for Digital Radio can be found in document ISO/IEC JTC1/SC29/WG11 N10567 available at http://www.chiariglione.org/mpeg/hot_news.htm.

New Presentation Element Added to Multimedia Framework

The MPEG-21 Multimedia Framework already provides flexible and efficient ways to package multimedia resources and associated metadata in a structured manner. At its 88th meeting, MPEG advanced to the formal approval stage a new amendment to MPEG-21 (ISO/IEC JTC1/SC29/WG11 21000-2 PDAM 1 Presentation of Digital Item) to define a new element that can be used to provide information relevant to the presentation of multimedia resources. Specifically, the new element, called Presentation, will describe multimedia resources in terms of their spatio-temporal relationships and their interactions with users. In a related effort, MPEG also began the formal approval process for another amendment to MPEG-21 (ISO/IEC JTC1/SC29/WG11 21000-4 PDAM 2 Protection of Presentation Element) so that the new Presentation element can be associated with the Intellectual Property Management and Protection (IPMP) element for content protection and management.

Other Notable MPEG Events

MPEG Plans First MXM Developer’s Day

The first International MPEG Extensible Middleware (MXM) Developer’s Day will be held on 30 June at the Queen Mary University, London, U.K. The purpose of this event is to share with the software developer’s community the state of the art and the prospects of MPEG Extensible Middleware, a standard designed to promote the extended use of digital media content through increased interoperability and accelerated development of components, solutions, and applications. The event is free of charge. For more information, or to register, visit http://mxm.wg11.sc29.org.

MMT Workshop Targets Requirements for Streaming of MPEG Content

The Workshop for MPEG Media Transport (MMT) will be held on 1 July during the 89th MPEG meeting at the Queen Mary University in London, U.K. The purpose of this event is to gather new requirements, use cases, and contributions related to the transport of multimedia content over heterogeneous networks. In particular, MPEG is gathering information on current limitations of available standards in the area of media streaming and associated challenges in emerging network environments. The MMT workshop is also free of charge. For more information, visit http://www.chiariglione.org/mpeg/hot_news.htm

Communicating the large and sometimes complex array of technology that the MPEG Committee has developed is not a simple task. The experts past and present have contributed a series of white-papers that explain each of these standards individually. The repository is growing with each meeting, so if something you are interested in is not there yet, it may appear there shortly – but you should also not hesitate to request it. You can start your MPEG adventure at: http://www.chiariglione.org/mpeg/mpeg-tech.htm

Future MPEG meetings are planned as follows:

For further information about MPEG, please contact:

mailto:leonardo(at)chiariglione(dot)org

or

This press release and other MPEG-related information can be found on the MPEG homepage:

http://www.chiariglione.org/mpeg

The text and details related to the Calls mentioned above (together with other current Calls) are in the Hot News section, http://www.chiariglione.org/mpeg/hot_news.htm. These documents include information on how to respond to the Calls.

The MPEG homepage also has links to other MPEG pages which are maintained by the MPEG subgroups. It also contains links to public documents that are freely available for download by those who are not MPEG members. Journalists that wish to receive MPEG Press Releases by email should contact Dr. Arianne T. Hinds using the contact information provided above.

Tuesday, April 21, 2009

Note Published: W3C Personalization Roadmap: Ubiquitous Web Integration of AccessForAll 1.0

They probably should also include the work of ISO/IEC JTC 1/SC 29/WG 11 (MPEG) on Usage Environment Description (UED) which also provides means to describe user characteristics including accessibility information. UED has been standardized within Part 7 of MPEG-21, entitled Digital Item Adaptation (DIA). The UED Schema can be found here and just search for AuditoryImpairment or VisualImpairment.

Friday, March 6, 2009

Multimedia Delivery in the Future Internet

At the beginning it provides an overview of the market environment & business motivations before introducing the multimedia content in the future internet, namely 3D content, multi-view video coding, H.265, and MPEG/LaSER. This was the time when HVC was not yet born.

Next, converged networks are presented followed by cross-layer adaptation for enriched perceived Quality of Service (PQoS). I contributed to the latter, specifically with cross-layer optimization/adaptation techniques and how MPEG-21 could help to increase the level of interoperability.

Finally, this white paper also describes means for multimedia rights management.

Wednesday, September 3, 2008

Workshop Program: Many Faces of Multimedia Semantics

8:30 - 10:00am – Keynote Talk: Dr. Alberto del Bimbo

Session Chair: William I. Grosky

Learning Ontology Rules for Semantic Video Annotation

Marco Bertini, Alberto Del Bimbo, Giuseppe Serra

10:00 - 10:30am – Coffee Break

10:30am - 12:00pm – Session 1: Annotation: Ad-Hoc and Standards-Based

Session Chair: Farshad Fotouhi

Chants and Orcas: Semi-Automatic Tools for Audio Annotation and Analysis in Niche Domains

Steven Ness, Matthew Wright, Luis Gustavo Martins, George Tzanetakis

The Semantics of MPEG-21 Digital Items Revisited

Christian Timmerer, Maria Teresa Andrade, Pedro Carvalho, Davide Rogai, Giovanni Cordara

Multimedia Knowledge Management Using Ontologies

Antonio Penta, Antonio Picariello, Letizia Tanca

12:00 - 1:30pm – Lunch

1:30 - 3:00pm – Session 2: User-Based and Event Semantics

Session Chair: Giuseppe Amato

Affective Ranking of Movie Scenes Using Physiological Signals and Content Analysis

Mohammad Soleymani, Guillaume Chanel, Joep Kierkels, Thierry Pun

An Exploratory Study on Joint Analysis of Visual Classification in Narrow Domains and the Discriminative Power of Tags

Oge Marques, Mathias Lux

Spatio-Temporal Query for Multimedia Database

Sujal Wattamwar, Hiranmay Ghosh

3:00 - 3:30pm – Coffee Break

3:30 - 4:30pm – Session 3: Short Papers and Demos

Session Chair: Peter Stanchev

Employing a Photo’s Life Cycle for Multimedia Retrieval

Philipp Sandhaus, Susanne Boll

Use of Weighted Visual Terms for Machine Learning Techniques for Image Content Recognition Relying on MPEG-7 Visual Descriptors

Giuseppe Amato, Pasquale Savino

Tuesday, August 26, 2008

The Semantics of MPEG-21 Digital Items Revisited

Abstract

The MPEG-21 standard forms a comprehensive multimedia framework covering the entire multimedia distribution chain. In particular, it provides a flexible approach to represent, process, and transact complex multimedia objects which are referred to as Digital Items (DIs). DIs can be quite generic, independent of the application domain, and can encompass a diversity of media resources and metadata. This flexibility has an impact on the level of interoperability between systems and applications, since not all the functionality needs to be implemented. Furthermore, additional semantic rules may be implemented through the processing of the Digital Item which is possibly driven by proprietary metadata. This jeopardizes interoperability and consequently raises barriers to the successful achievement of augmented and transparent use of multimedia resources. In this context, we have investigated and evaluated the interoperability at the semantic level of Digital Items throughout the automated production, delivery and consumption of complex multimedia resources in heterogeneous environments. This paper describes the studies conducted, the experiments performed, and the conclusions reached towards that goal.

Full reference

Christian Timmerer, Maria Teresa Andrade, Pedro Carvalho, Davide Rogai, and Giovanni Cordara, "The Semantics of MPEG-21 Digital Items Revisited", Proceedings of ACM Multimedia 2008 2nd International Workshop on the Many Faces of Multimedia Semantics, Vancouver, Canada, October 27 - November 1, 2008.

Wednesday, July 16, 2008

Keynote@TEMU'08: MPEG-21 for Integrated E2E Management enabling QoS

A summary is given below. Interestingly, a question from the auditorium was whether these description formats are somehow used by the IP Multimedia Subsystem (IMS). The answer was no as there are currently no standardized means to transport MPEG metadata (this is true for both MPEG-7 and MPEG-21) using IETF-based protocols. However, there have been projects transporting these metadata formats, e.g., using HTTP, SDP(ng), RTP, etc., but this has not been recognized by the IETF so far or proponents of these implementations were not able to bring them to the appropriate working groups of IETF (for whatever reasons...).

Summary/Abstract of my talk [PDF]

The information revolution of the last decade has resulted in a phenomenal increase in the quantity of content (including multimedia content) available to an increasing number of different users with different preferences who access it through a plethora of devices and over heterogeneous networks. End devices range from mobile phones to high definition TVs, access networks can be as diverse as GSM and broadband networks, and the various backbone networks are different in bandwidth and Quality of Service (QoS) support. In addition, users have different content/presentation preferences and intend to consume the content at different locations, times, and under altering circumstances.

In order to make the vision indicated above a reality, substantial research and standardization efforts have been undertaken, which are collectively referred to as Universal Multimedia Access (UMA). An important and comprehensive standard in this field is the MPEG-21 Multimedia Framework, formally referred to as ISO/IEC 21000. The aim of MPEG-21 is to enable transparent and augmented use of multimedia resources across a wide range of networks, devices, user preferences, and communities, notably for trading (of bits). In particular, it shall enable the transaction of Digital Items among Users. A Digital Item is defined as a structured digital object with a standard representation and metadata and is the fundamental unit of transaction and distribution within the MPEG-21 multimedia framework. A User (please note the upper case “U”) is defined as any entity that interacts within this framework or makes use of Digital Items. The MPEG-21 standard currently comprises 17 parts which can be clustered into six major categories, each dealing with a different aspect of Digital Items: declaration (and identification), digital rights management, adaptation, processing, systems, and miscellaneous aspects (i.e., reference software, conformance, etc.). The talk will present and review these concepts with an emphasis on providing universal access to multimedia content independent of the User's location, time, and other usage environment conditions.

Several projects funded by the European Commission (EC) – DANAE and ENTHRONE (blog) are worth mentioning among them – have implemented and integrated (parts of) the MPEG-21 standard in order to demonstrate its feasibility. The aim of the DANAE Specific Targeted Research Project (STREP) was to develop scalable coding formats and an MPEG-21-based end-to-end architecture comprising a server, client, and adaptation node (all MPEG-21-enabled) which allows for dynamic and distributed adaptation of scalable media formats. On the other hand, the objectives of the ENTHRONE Integrated Project (IP) are to provide an integrated management solution enabling QoS within heterogeneous environments based on MPEG-21 and to demonstrate the ENTHRONE solution in a large-scale pilot. Therefore, the talk will review ENTHRONE's contribution to the UMA issue and will demonstrate how the MPEG-21 concepts are adopted on a broader scale.
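The core idea behind adapting scalable media to a usage environment can be illustrated with a few lines of code. This is only a sketch under my own assumptions, not the DANAE or ENTHRONE decision logic: the `Layer` and `UsageEnvironment` classes, the `select_layer` function, and the bitrates in the example are all hypothetical, and a real adaptation engine would take many more constraints into account.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One operating point of a scalable bitstream (hypothetical model)."""
    name: str
    bitrate_kbps: int  # cumulative bitrate needed to decode up to this layer
    width: int
    height: int


@dataclass(frozen=True)
class UsageEnvironment:
    """A tiny subset of what a usage environment description could carry."""
    bandwidth_kbps: int
    display_width: int
    display_height: int


def select_layer(layers, env):
    """Pick the highest-bitrate layer that fits both the network and the display.

    Falls back to the lowest-bitrate layer if nothing fits.
    """
    feasible = [
        layer for layer in layers
        if layer.bitrate_kbps <= env.bandwidth_kbps
        and layer.width <= env.display_width
        and layer.height <= env.display_height
    ]
    if feasible:
        return max(feasible, key=lambda layer: layer.bitrate_kbps)
    return min(layers, key=lambda layer: layer.bitrate_kbps)


# Illustrative operating points; the numbers are made up.
LAYERS = [
    Layer("QCIF", 128, 176, 144),
    Layer("CIF", 512, 352, 288),
    Layer("4CIF", 1500, 704, 576),
]

phone = UsageEnvironment(bandwidth_kbps=300, display_width=320, display_height=240)
tv = UsageEnvironment(bandwidth_kbps=2000, display_width=1920, display_height=1080)
print(select_layer(LAYERS, phone).name)
print(select_layer(LAYERS, tv).name)
```

With a scalable format, the adaptation node then only has to drop the layers above the selected one, which is what makes dynamic and distributed adaptation feasible without transcoding.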

Wednesday, July 2, 2008

Interoperable SVC Streaming featuring MPEG-21 DIA at ICME'08 Demo Track

Interestingly, thanks to the optimizations of the reference software, the demo provides superior performance compared to other SVC player implementations. A lot of people were impressed that the sequences were shown smoothly on a "casual" laptop without any delays or faulty pictures.

The 2-pager (demo description) is available here.

Wednesday, April 30, 2008

MPEG news: a report from the 84th meeting in Archamps, France

MPEG Representation of Sensory Effects

I've reported on that recently; this topic is maturing into a Call for Proposals (CfP), and the proposed technologies will be evaluated during the July meeting in Hannover, Germany. In this CfP, MPEG is requesting technologies for the following items to be standardized:

- Sensory Effect Metadata: description schemes and descriptors representing Sensory Effects.

- Sensory Device Capabilities and Commands: description schemes and descriptors representing characteristics of Sensory Devices and means to control them.

- User Sensory Preferences: description schemes and descriptors representing user preferences with respect to rendering of sensory effects.

Microsoft has adopted MPEG-21 technology within their Interactive Media Manager, a collaborative media management solution that extends Microsoft Office SharePoint® Server 2007 for media and entertainment companies. Interestingly, they have adopted the Digital Item Model - an abstract model expressed in EBNF - and defined their own implementation of this model using RDF/OWL. Note that MPEG's implementation of the model is called Digital Item Declaration Language (DIDL), which is based on XML Schema.

Note: UPnP has also adopted MPEG-21 DIDL, but in a kind of dialect called DIDL-Lite.

Multimedia Extensible Middleware (MXM)

The Multimedia Extensible Middleware (MXM) aims to define APIs for various purposes. The requirements and also a Call for Proposals (requirements) have been issued at this meeting. It's very interesting to see that it should also accommodate peer-to-peer technologies, i.e., storage/consumption of content in a distributed environment (a P2P infrastructure based, e.g., on distributed hash tables).

Other issues discussed at the meeting

- Presentation of Structured Information: For this item a Call for Proposals (requirements) has been issued. One use case and overall model is to include a presentation description in Digital Items, so that a terminal receiving a Digital Item automatically extracts this information asset and uses it to present the Digital Item.

- MPEG User Interface Framework: personalized, adaptable, and exchangeable rich user interfaces based on the use of a presentation format (BIFS, LaSER, ...), a language for describing the personalization context (UED, CC/PP, DCO, ...), and home network protocols (DLNA, UPnP, ...).

- MPEG-V: This new project item defines interfaces between virtual worlds and between virtual worlds and the real world. An extended call for requirements has been issued.

- Multimedia Value Chain Ontology (MVCO): aims to define an ontology for the whole multimedia value chain and a Call for Proposals (requirements) has been issued. However, the main focus is on rights-related issues at this moment.

Saturday, June 23, 2007

The MPEG-21 Multimedia Framework

The aim of the MPEG-21 standard, the so-called Multimedia Framework, is to enable transparent and augmented use of multimedia resources across a wide range of networks, devices, user preferences, and communities, notably for trading (of bits). As such, it provides the next step in MPEG's standards evolution, i.e., the transaction of Digital Items among Users.

A Digital Item is a structured digital object with a standard representation and metadata. As such, it is the fundamental unit of transaction and distribution within the MPEG-21 multimedia framework. In other words, it aggregates multimedia resources together with metadata, licenses, identifiers, intellectual property management and protection (IPMP) information, and methods within a standardized structure.

A User (please note the upper case “U”) is defined as any entity that interacts within this framework or makes use of Digital Items. It is important to note that Users may include individuals as well as communities, organizations, or governments, and that Users are not even restricted to humans, i.e., they may also include intelligent software modules such as agents.

The MPEG-21 standard currently comprises 18 parts which can be clustered into six major categories, each dealing with a different aspect of Digital Items: declaration (and identification), digital rights management, adaptation, processing, systems, and miscellaneous aspects (i.e., reference software, conformance, etc.):

- Declaration and identification: for declaring Digital Items MPEG-21 adopted XML Schema to define the Digital Item Declaration Language (DIDL). An XML document conforming to DIDL is called a Digital Item Declaration (DID), which defines the structure of the Digital Item, i.e., how resources and metadata relate to each other. Furthermore, MPEG-21 provides means for uniquely identifying Digital Items and parts thereof. However, it is important to emphasize that MPEG-21 does not define yet another identification scheme; in fact, it accommodates existing schemes such as the International Standard Book Number (ISBN) or the International Standard Serial Number (ISSN) and specifies means for establishing a registration authority for Digital Items.

- Digital rights management: how to include IPMP information and protected parts of Digital Items in a DIDL document is specified in Part 4 of MPEG-21. It deliberately does not include protection measures, keys, key management, trust management, encryption algorithms, certification infrastructures, or other components required for a complete DRM system. Rights and permissions on digital resources in MPEG-21 can be defined as the action (or activity) or a class of actions that a principal may perform on or using the associated resource under given conditions, e.g., time, fee, etc. Rights and permissions are accompanied by a set of clear, consistent, structured, integrated, and uniquely identified terms within a well-structured, extensible dictionary.

- Adaptation of Digital Items: is a vital and comprehensive part of MPEG-21, particularly with regard to Universal Multimedia Access. It is referred to as Digital Item Adaptation (DIA), which specifies normative description tools to assist with the adaptation of Digital Items. In particular, the DIA standard specifies means enabling the construction of device- and coding-format-independent adaptation engines. Note that only the tools used to guide the adaptation engine are specified by DIA, while the adaptation engines themselves are left open to industry competition.

- Processing of Digital Items: includes methods within the statically declared Digital Items that are usually presented to the User for selection. These methods are written in ECMAScript and may utilize Digital Item Base Operations (DIBOs), which are analogous to the standard library of functions of a programming language. However, bindings to other programming languages (currently Java and C++) have also been defined.

- MPEG-21 Systems: include a file format that forms the basis of an interoperable exchange of Digital Items (as files). MPEG's binary format for metadata (BiM) has been adopted as an alternative schema-aware XML serialization format which adds streaming capabilities to XML documents, among other useful features. Finally, this category defines how to map Digital Items onto various transport mechanisms (MPEG-2 TS, RTP, etc.), whereby various parts of Digital Items can be streamed over different channels.
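To illustrate the adaptation category from the list above: DIA standardizes only the descriptions that guide an adaptation engine, not the engine itself. The following Python sketch shows what a very simple decision step of such an engine could look like. Everything in it is hypothetical: the `Variation` and `UsageEnvironment` classes, their fields, and the selection rule (pick the highest-bitrate variation that fits the bandwidth and display) are illustrative assumptions, not structures or algorithms defined by MPEG-21.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Variation:
    """One available version of a resource (hypothetical description)."""
    name: str
    bitrate_kbps: int
    width: int
    height: int


@dataclass(frozen=True)
class UsageEnvironment:
    """Constraints of the consuming terminal and network (hypothetical)."""
    max_bandwidth_kbps: int
    display_width: int
    display_height: int


def choose_variation(
    variations: Sequence[Variation], env: UsageEnvironment
) -> Optional[Variation]:
    """Return the highest-bitrate variation that satisfies all constraints,
    or None if no variation fits the usage environment."""
    feasible = [
        v
        for v in variations
        if v.bitrate_kbps <= env.max_bandwidth_kbps
        and v.width <= env.display_width
        and v.height <= env.display_height
    ]
    return max(feasible, key=lambda v: v.bitrate_kbps, default=None)


variations = [
    Variation("low", 300, 320, 240),
    Variation("mid", 1200, 1280, 720),
    Variation("high", 4000, 1920, 1080),
]
phone = UsageEnvironment(max_bandwidth_kbps=1500, display_width=1280, display_height=720)
print(choose_variation(variations, phone))
```

The point of the sketch is the separation of concerns: the descriptions of the content and of the usage environment are the interoperable part, while the decision logic in `choose_variation` could be replaced by any vendor-specific strategy.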

Further readings:

- Part 2: Digital Item Declaration

- Part 3: Digital Item Identification

- Part 4: Intellectual Property Management and Protection Components

- Part 5: Rights Expression Language

- Part 6: Rights Data Dictionary

- Part 7: Digital Item Adaptation and Session Mobility

- Part 8: Reference Software

- Part 9: File Format

- Part 10: Digital Item Processing and C++ bindings

- Part 11: Evaluation Tools for Persistent Association

- Part 15: Event Reporting

- Part 17: Fragment Identification for MPEG Resources

- Part 18: Digital Item Streaming

Publicly available MPEG-21 standards: